So it looks to me like a hardware error, and I should get rid of both NVMe drives. Before this, I didn't have root mail set up correctly. I only noticed the issue after Apache failed to start and I repaired the filesystem with fsck.ext4 -f.

    Smart Log for NVME device:nvme1 namespace-id:ffffffff
    Copyright (C) 2002-16, Bruce Allen, Christian Franke,
    === START OF SMART DATA SECTION ===
    SMART/Health Information (NVMe Log 0x02, NSID 0xffffffff)
    Error Information (NVMe Log 0x01, max 128 entries)
    SMART overall-health self-assessment test result: PASSED
    St Op Max Active Idle RL RT WL WT Ent_Lat Ex_Lat
    Optional NVM Commands (0x005f): Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat *Other*
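The "Smart Log for NVME device" header above is what nvme-cli prints for its smart-log command. NVMe drives do not expose ATA attributes, so the closest counters are fields in that log. Below is a minimal sketch of picking a few of them out of the text output. It assumes the usual `name : value` layout and the field names `critical_warning`, `media_errors` and `num_err_log_entries`; only the header line comes from the post, and every value in the sample is made up for illustration.

```python
# Hypothetical sample: only the header line is taken from the post.
# All values below are invented for illustration.
SAMPLE_SMART_LOG = """\
Smart Log for NVME device:nvme1 namespace-id:ffffffff
critical_warning                    : 0
available_spare                     : 100%
percentage_used                     : 2%
media_errors                        : 0
num_err_log_entries                 : 14
"""

# Counters worth a closer look when they are nonzero (assumed field names).
WATCHED = ("critical_warning", "media_errors", "num_err_log_entries")


def parse_smart_log(text):
    """Split each 'name : value' line into a dict entry."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            fields[key.strip()] = value.strip()
    return fields


def nonzero_counters(fields):
    """Return the watched counters whose value is not zero."""
    flagged = []
    for name in WATCHED:
        raw = fields.get(name, "0").replace(",", "")
        # base 0 accepts both decimal ("14") and hex ("0x4") notation
        if int(raw, 0) != 0:
            flagged.append(name)
    return flagged


fields = parse_smart_log(SAMPLE_SMART_LOG)
print(nonzero_counters(fields))
```

A nonzero error-log count alone does not prove that a drive is failing, so this is only a starting point next to the dmesg messages.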

#SMARTCTL CHECK SSD HEALTH SOFTWARE#

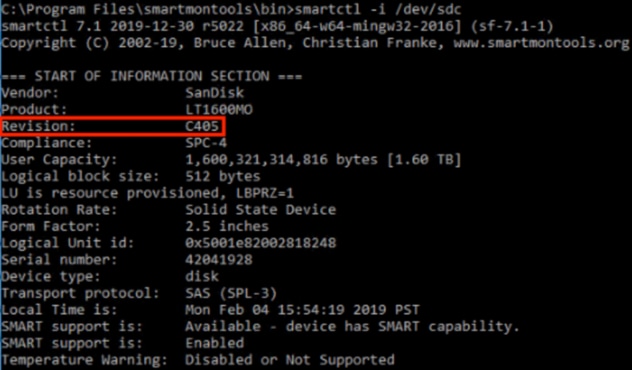

I'm running Debian stable with 2 x NVMe in RAID 1. Here is the hardware/hoster it's running on

Almost every second day, mdadm monitoring reports a Fail event and leaves the array degraded. It only disables one partition, as you can see here:

    This is an automatically generated mail message from mdadm
    A Fail event had been detected on md device /dev/md/2.
    It could be related to component device /dev/nvme1n1p3.
    The /proc/mdstat file currently contains the following:
    md2 : active raid1 nvme1n1p3(F) nvme0n1p3
    md0 : active (auto-read-only) raid1 nvme1n1p1 nvme0n1p1

One time it's nvme0n1p3, and the next time it's nvme1n1p3. I then just re-add the failed partition with

    mdadm --re-add /dev/md2 /dev/nvme0n1p3

or

    mdadm --re-add /dev/md2 /dev/nvme1n1p3

and after the resync it works for a day or two.

In dmesg I found this:

    nvme nvme1: I/O 311 QID 1 timeout, reset controller
    nvme nvme1: completing aborted command with status: 0007
    blk_update_request: I/O error, dev nvme1n1, sector 452352001
    nvme nvme1: completing aborted command with status: fffffffc
    blk_update_request: I/O error, dev nvme1n1, sector 68159504

To gain insight into the health of your SSD, you can use a software tool called smartmontools, which taps into the Self-Monitoring, Analysis and Reporting Technology (SMART) system built into the drive. I tried to check the health of the devices with these commands, but they don't give me stats like "Reallocated_Sector_Ct" or "Reported_Uncorrect".

    smartctl 6.6 r4324 (local build)
    Copyright (C) 2002-16, Bruce Allen, Christian Franke,
    === START OF INFORMATION SECTION ===
    Firmware Updates (0x14): 2 Slots, no Reset required
    Optional Admin Commands (0x0017): Security Format Frmw_DL *Other*
    Local Time is: Mon May 13 10:34:11 2019 CEST
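Since the failing member alternates between the two drives, it can help to record which partition is flagged each time. The mdstat lines quoted in the Fail mail mark a failed member with `(F)`. Here is a rough sketch of extracting those members from mdstat-style text. The sample string reuses the two array lines from the post; the function name is hypothetical, and the regular expression also tolerates the `[n]` slot index that a real /proc/mdstat normally prints after each member.

```python
import re

# The two array lines as quoted in the post. A real /proc/mdstat has
# more lines (personalities, block counts, resync status).
SAMPLE_MDSTAT = """\
md2 : active raid1 nvme1n1p3(F) nvme0n1p3
md0 : active (auto-read-only) raid1 nvme1n1p1 nvme0n1p1
"""


def failed_members(mdstat_text):
    """Map each md array to the list of members flagged with (F)."""
    result = {}
    for line in mdstat_text.splitlines():
        match = re.match(r"^(md\d+)\s*:\s*(.*)$", line.strip(), re.IGNORECASE)
        if not match:
            continue  # not an array line
        array = match.group(1).lower()
        # member name, optional [slot] index, then the (F) marker
        result[array] = re.findall(r"(\S+?)(?:\[\d+\])?\(F\)", match.group(2))
    return result


print(failed_members(SAMPLE_MDSTAT))
```

On a live system the same function could be fed the contents of /proc/mdstat, for example from a cron job that logs the result with a timestamp.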
